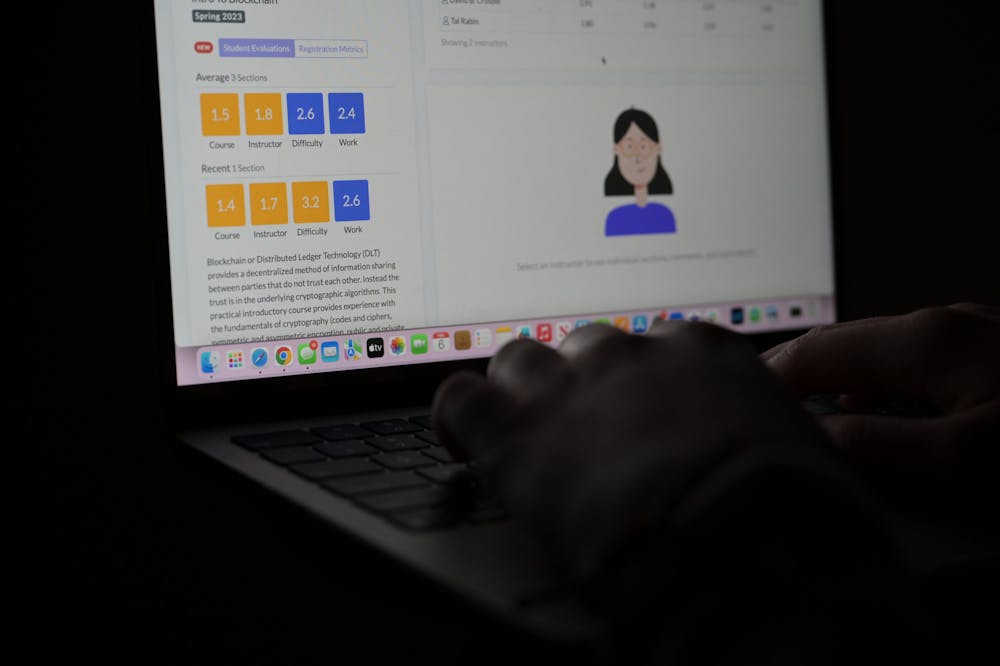

Penn students can see evaluations of teachers and classes through Penn Course Review.

Credit: Uma Mukhopadhyay

The Daily Pennsylvanian spoke with Penn faculty members about student course evaluations, with many noting significant room for improvement in the process.

Undergraduate course reviews take place during the last week of each semester and are conducted by the Provost’s office. The anonymous assessments are submitted through Path@Penn and must be completed before students can access their final grades. Once the evaluations are processed, instructors can see them a few days after final grades are released. Students can then view a course’s or professor’s evaluations on the Penn Course Review website.

English Department Undergraduate Chair Nancy Bentley wrote in a statement to the DP that course reviews do not lead to “big changes through departmental policies or teaching assignments.” Rather, Bentley said, individual instructors “absorb and respond to student feedback.” She added that course reviews are used in mentoring incoming faculty members, helping them get “a feel of the Penn student body and refine their pedagogy.”

Math Department Undergraduate Chair Henry Towsner said that his department focuses primarily on course evaluations for the introductory calculus courses, citing MATH 1400 and 1410, which are often rated among the least popular courses at the University.

Towsner also said that while his department takes notice if a course evaluation is “way out of line” with departmental expectations, evaluations alone rarely lead to significant changes.

“I can’t think of a time where course evaluations were the main driver of anything,” Towsner said. “I’ve never seen anything as direct as ‘Well, this person is getting a 1.5, we can’t let them teach.'”

Mathematics Senior Lecturer Andrew Cooper recalled that his course evaluation numbers, which he admitted were “on the low side,” were cited during his last contract renewal. However, he said that they did not play a major role in his department’s decision-making.

“It’s one data point, but it’s not the end-all, be-all,” Cooper said.

Towsner said he believed Penn’s course evaluation process could be more effective if it asked specific questions rather than gauging general attitudes through questions like “How do you feel about the course?”

“We know a lot about how to design a good survey and have just chosen not to implement that in any way with course evaluations,” Towsner said. “You ask people specific questions at first, like whether the professor was available, if stuff was graded, and returned in a reasonable way.”

Similarly, Bentley said that for course evaluations to be an effective tool, students need to “[take] the time to provide specific comments” in constructive reviews, which give “more concrete information to help [faculty] revise their courses.”

Other faculty members noted that student evaluations are often inconsistent. Cooper, for example, said that his ratings often display “both high and low peaks,” making it difficult to evaluate the quality of his courses.

Computer and Information Science Senior Lecturer Harry Smith similarly said that he found the “significant differences” in instructor ratings for co-taught courses “a little confusing or dispiriting.” He also expressed concern that instructor quality ratings can be influenced by biases related to factors like race and gender.

Cooper said that certain comments left on evaluations can be “fairly nasty.”

“It’s always hard to read negative things about yourself, but there’s a difference when saying things like, ‘You’re the worst person in the history of the world,’ ‘You should die,’” Cooper said. “Which is not an exaggeration. People do write that.”

The Daily Pennsylvanian is an independent, student-run newspaper.